ChatGPT adds GitHub repo integration

Attach a GitHub repository to Deep Research and get cited insights, architecture overviews, and TODO audits (no copy‑paste or local cloning required)

OpenAI slipped a quiet bombshell into ChatGPT this week: Deep Research can now read an entire GitHub repository as easily as it skims a Wikipedia page. The connector‑style integration turns a chat window into a full‑text search engine, static‑analysis tool, and documentation generator for any repo you authorize. It’s the strongest argument yet for doing things like code reviews in a chat interface, and it finally puts ChatGPT in the “AI‑in‑your‑codebase” competition.

What exactly landed

OpenAI calls the feature its first “connector” for Deep Research. After a one‑time GitHub OAuth flow, ChatGPT can crawl code, READMEs, and design docs inside the repo, then answer natural‑language questions with inline, source‑linked citations. Access respects GitHub permissions, and newly added repositories appear after a short indexing delay.

Availability. Team at launch; Plus/Pro over the coming days; Enterprise “soon”.

Scope. Code, Markdown, and text files only (no commit history).

Security. Content from business tiers stays out of model training by default.

Getting it set up

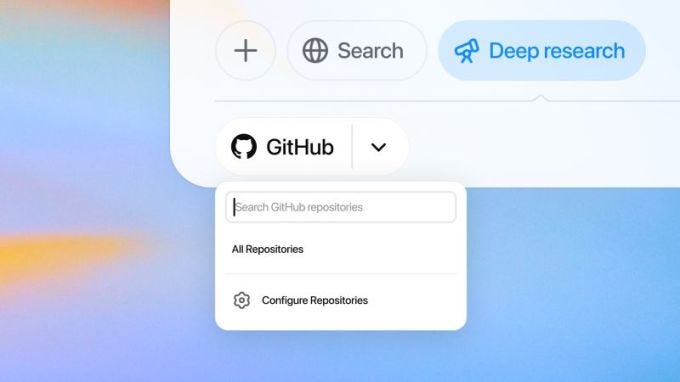

Enable Deep Research. Profile menu → Settings → Beta features → Deep Research.

Attach GitHub. Click the paper‑clip in any chat, pick GitHub, complete OAuth, and select the repos (private or public) you want ChatGPT to see.

If a repo is missing from the list, wait about five minutes for first‑time indexing.

Ask questions. “Trace the auth flow,” “Generate a high‑level architecture diagram,” or “Show every TODO that mentions deprecation.” ChatGPT cites file paths in its answer.

Why it obliterates copy/paste workflows

Cut‑and‑paste dumps can’t compete: they go stale as soon as a file changes, shred folder structure, and blow through your context window.

Full‑repo context. The connector streams only the slices it needs, bypassing token‑limit gymnastics.

Live sync. Answers draw on the repo’s latest indexed state, not stale snippets lingering in chat history.

Citation trail. Every claim links back to a concrete line number, removing “where did this come from?” guesswork.

Security parity. GitHub permissions gate access; you never hand‑paste proprietary code into a public LLM.

Human time saved. Early testers report onboarding docs generated “in minutes, not afternoons.”

Versus the rest

How does it stack up against competitors? And what about different workflows, such as AI‑enabled IDEs like Cursor and Windsurf?

Claude 3 + GitHub. Anthropic quietly shipped its own connector last month. The UI is similar, but context is bounded by Claude’s smaller token window, and answers lack citations.

Replit Agent. A build‑bot that scaffolds whole apps in your workspace; great for green‑field projects, less surgical for legacy code spelunking.

Cursor and Windsurf. Full‑featured IDEs that offer live inline edits and refactors, tight VS Code integration, and more. Deep Research’s GitHub integration serves a clearly different use case, so a direct comparison is hard, but it may be the better option when you hit a wall with Cursor’s agent.

If your priority is understanding existing code, Deep Research now owns the crown. If you need AI to type the code for you, dedicated IDE agents still win (at least for now).

Turn it on as soon as it reaches your Plus, Pro, or Team account. Setup takes seconds, and the payoff in repo comprehension shows up immediately. Because the connector is read‑only, audits, Docs‑as‑Code workflows, and cross‑functional reviews stay safe from accidental edits.

OpenAI says it is planning a steady drip of new connectors (think Jira, Figma, Notion) as it pushes Deep Research toward an all‑in‑one enterprise knowledge hub. Rivals will need wider context windows and rock‑solid citations soon, or they’ll concede the code‑understanding hill to OpenAI.