o3 makes ChatGPT an agent

OpenAI just released the full o3 model (and o4-mini!); here's how the combined reasoning and tool usage is another move towards an agentic future

OpenAI just launched o3 and o4-mini, the newest models in its reasoning-centric o-series. They mark a clear shift toward tool-augmented agents, blending advanced reasoning with full access to ChatGPT’s existing toolkit.

Giving the o-series a toolkit

The o-series emphasizes reasoning depth over speed: its models are designed to tackle complex prompts with more deliberate computation than standard GPT models.

From announcement to reality

The original o1 model demonstrated impressive reasoning capabilities, particularly in STEM fields. o3-mini, released earlier, improved performance while staying efficient. Now, with today's release, o3 and o4-mini take a leap forward by introducing comprehensive tool use.

These models can decide when and how to use all of ChatGPT’s existing tools:

Web search for accessing up-to-date information

Python code generation and execution for data analysis

Visual reasoning for interpreting images, charts, and diagrams

Image generation capabilities

This represents an expected shift toward more agentic AI systems that can independently execute tasks on users' behalf.

Capabilities and performance

o3: Setting new standards for AI reasoning

o3 is OpenAI’s current reasoning flagship, outperforming prior models across various benchmarks:

Coding and software development

Mathematical problem-solving

Scientific reasoning

Visual perception and analysis

The model has achieved state-of-the-art performance on numerous benchmarks including:

Codeforces

SWE-bench (without specialized scaffolding)

MMMU (multimodal understanding)

In evaluations by external experts, o3 makes 20 percent fewer major errors than OpenAI o1 on difficult, real-world tasks, with particular excellence in programming, business/consulting, and creative ideation. Early testers highlighted its analytical rigor and ability to generate and critically evaluate novel hypotheses in fields like biology, math, and engineering.

o4-mini: Efficient reasoning at scale

OpenAI o4-mini represents a smaller, more efficient model optimized for fast, cost-efficient reasoning. o4-mini trades size for speed and throughput, but still beats o3-mini on most tasks:

On AIME 2025, o4-mini scores 99.5 percent when given access to a Python interpreter, effectively saturating this benchmark

It outperforms its predecessor, o3-mini, on non-STEM tasks and specialized domains like data science

Its efficiency supports significantly higher usage limits than o3, making it ideal for high-volume applications

Both models demonstrate improved instruction following and provide more useful, verifiable responses than their predecessors, thanks to enhanced intelligence and the inclusion of web sources. They also feel more natural and conversational, especially as they reference memory and past conversations.

The power of reinforcement learning

Training o3 involved scaling reinforcement learning to levels previously only used in pretraining. The results confirm what you'd expect: more compute, more time, better output. OpenAI has pushed an additional order of magnitude in both training compute and inference-time reasoning, and continues to see clear performance gains.

Key technical highlights include:

Training the models to use tools through reinforcement learning—teaching them not just how to use tools, but to reason about when to use them

Integration of visual reasoning directly into the chain of thought

Optimizing for cost-efficiency while maintaining high performance

Multimodal reasoning with images

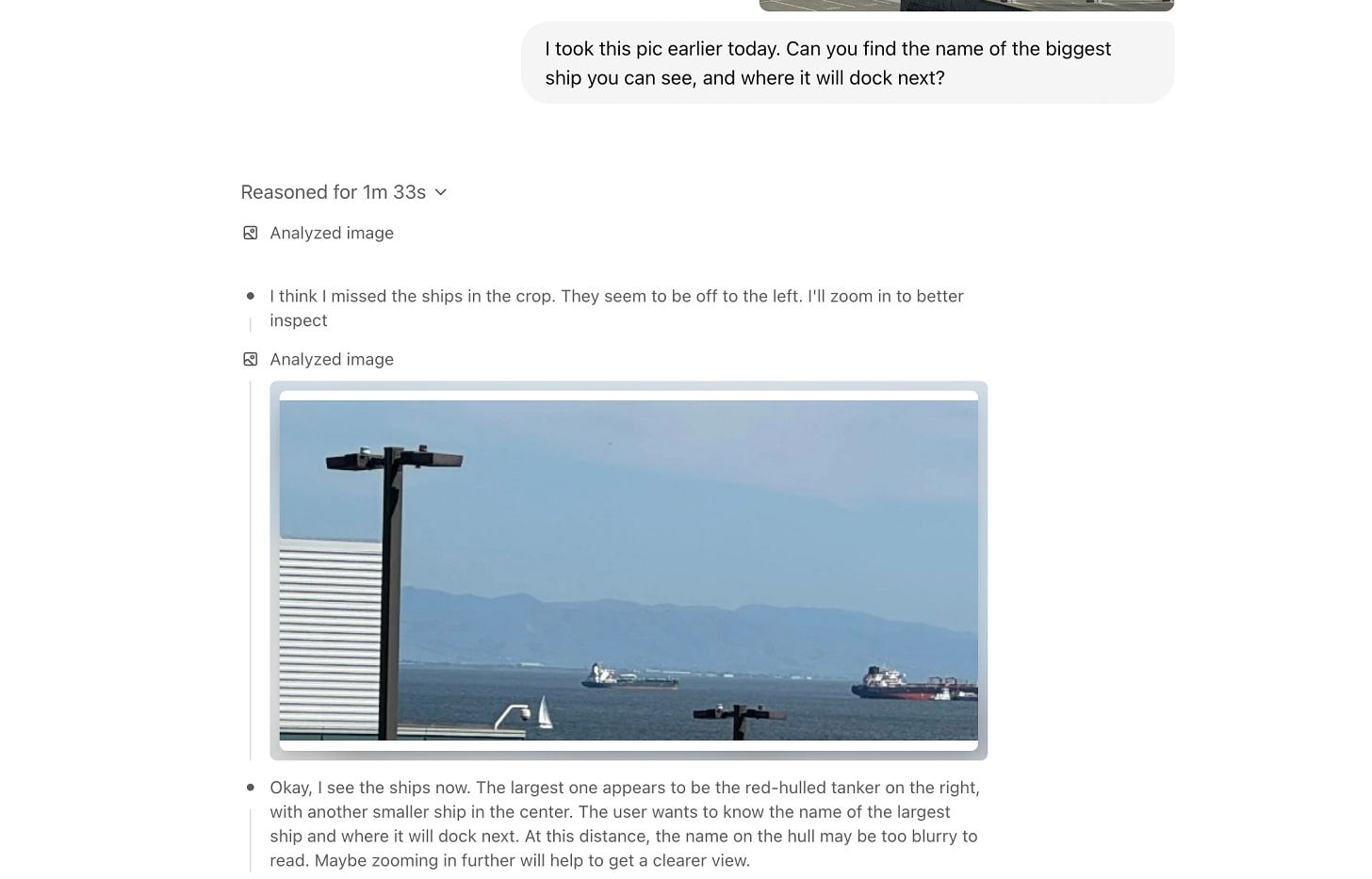

Visual content is now part of the reasoning loop. Upload a diagram, sketch, or blurry photo and o3 can interpret and act on it with the same logic it applies to text. This unlocks a new class of problem-solving that blends visual and textual reasoning.

The models can interpret these visuals (even if blurry, reversed, or low quality) and use tools to manipulate images on the fly as part of their reasoning process. This capability has resulted in best-in-class accuracy on visual perception tasks.

An agentic toolkit for ChatGPT

OpenAI o3 and o4-mini have full access to tools within ChatGPT, as well as custom tools via function calling in the API. These models are trained to reason about problem-solving approaches, choosing when and how to use tools to produce detailed and thoughtful answers in the right output formats—typically in under a minute.

For example, when asked "How will summer energy usage in California compare to last year?" the model can:

Search the web for public utility data

Write Python code to build a forecast

Generate a graph or image visualization

Explain the key factors behind the prediction

This flexible, strategic approach allows the models to tackle multi-faceted questions more effectively by:

Accessing up-to-date information beyond the model's built-in knowledge

Performing extended reasoning and analysis

Synthesizing information from multiple sources

Generating outputs across different modalities

The models can also adapt their approach as they work, searching the web multiple times with the help of search providers, evaluating results, and trying new searches if more information is needed.
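The loop described above (decide whether a tool is needed, call it, look at the result, then either call another tool or answer) can be sketched in a few lines. This is only an illustration under loud assumptions: `stub_model` is a hand-written stand-in for the real model, `get_utility_data` is a hypothetical tool, and the demand figures are placeholders rather than real utility data.

```python
import json
from typing import Callable

# Placeholder figures, NOT real utility data.
_FAKE_DEMAND_GWH = {2023: 100.0, 2024: 110.0}


def get_utility_data(year: int) -> dict:
    """Hypothetical tool: return summer electricity demand for a year."""
    return {"year": year, "demand_gwh": _FAKE_DEMAND_GWH[year]}


# Maps tool names the "model" may request to local Python callables.
REGISTRY: dict[str, Callable[..., dict]] = {"get_utility_data": get_utility_data}


def stub_model(messages: list[dict]) -> dict:
    """Stand-in for the model: ask for data until both years are known, then answer."""
    seen = {}
    for m in messages:
        if m["role"] == "tool":
            row = json.loads(m["content"])
            seen[row["year"]] = row["demand_gwh"]
    for year in (2023, 2024):
        if year not in seen:
            # Not enough information yet, so request another tool call.
            return {"tool_call": {"name": "get_utility_data",
                                  "arguments": json.dumps({"year": year})}}
    change = (seen[2024] - seen[2023]) / seen[2023] * 100
    return {"content": f"Demand changed by {change:.1f}% year over year."}


def run_agent(question: str, max_steps: int = 5) -> str:
    """Alternate between model turns and tool executions until an answer appears."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = stub_model(messages)
        if "tool_call" not in reply:
            return reply["content"]
        call = reply["tool_call"]
        result = REGISTRY[call["name"]](**json.loads(call["arguments"]))
        messages.append({"role": "tool", "content": json.dumps(result)})
    raise RuntimeError("step budget exhausted without a final answer")


print(run_agent("How will summer energy usage compare to last year?"))
```

The step budget is a design choice worth keeping in any real version of this loop: a model that keeps asking for more searches needs a hard stop.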

The boring bits

Cost-efficient reasoning

Beyond their enhanced capabilities, o3 and o4-mini are often more efficient than their predecessors. On benchmarks like the 2025 AIME math competition, the cost-performance frontier for o3 strictly improves over o1, and similarly, o4-mini's frontier strictly improves over o3-mini.

For most real-world usage scenarios, OpenAI expects that o3 and o4-mini will be both smarter and cheaper than o1 and o3-mini, respectively, delivering better value to users.
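To make "the frontier strictly improves" concrete, here is a minimal sketch of one way to read it: at every cost where the old model has a frontier point, the new model reaches higher accuracy for the same or lower cost. The definition and the `(cost, accuracy)` pairs below are my own illustrative assumptions, not OpenAI's published numbers or methodology.

```python
def frontier(points):
    """Keep only points that no cheaper-or-equal point matches or beats on accuracy."""
    best = []
    top = float("-inf")
    # Sort by cost ascending; on equal cost, try the more accurate point first.
    for cost, acc in sorted(points, key=lambda p: (p[0], -p[1])):
        if acc > top:
            best.append((cost, acc))
            top = acc
    return best


def best_at(front, budget):
    """Best accuracy reachable at or under the budget, or None if nothing fits."""
    affordable = [acc for cost, acc in front if cost <= budget]
    return max(affordable) if affordable else None


def strictly_improves(new, old):
    """True if the new frontier beats every old frontier point at no extra cost."""
    new_f, old_f = frontier(new), frontier(old)
    for cost, acc in old_f:
        reachable = best_at(new_f, cost)
        if reachable is None or reachable <= acc:
            return False
    return True


# Hypothetical (cost, accuracy) pairs, e.g. one per reasoning-effort setting.
OLD_MODEL = [(1.0, 0.60), (2.0, 0.70), (4.0, 0.75)]
NEW_MODEL = [(0.5, 0.65), (1.5, 0.80), (3.0, 0.90)]

print(strictly_improves(NEW_MODEL, OLD_MODEL))
```

Under this reading, a model can be more expensive at its highest effort setting and still have a strictly better frontier, as long as it wins at every budget the old model could be run at.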

Comprehensive protections

OpenAI rebuilt its safety training data and stress-tested these models against its latest risk framework. The rebuilt data adds new refusal prompts in areas such as:

Biological threats (biorisk)

Malware generation

Jailbreak attempts

This refreshed data has led to strong performance on internal refusal benchmarks. Additionally, OpenAI has developed system-level mitigations to flag dangerous prompts in frontier risk areas, including a reasoning LLM monitor that works from human-written safety specifications.

Both models underwent OpenAI's most rigorous safety testing program to date, evaluated across the three tracked capability areas covered by their updated Preparedness Framework: biological and chemical, cybersecurity, and AI self-improvement. Both o3 and o4-mini remain below the Framework's "High" threshold in all three categories.

Frontier reasoning in the terminal with Codex CLI

Alongside the model releases, OpenAI has launched Codex CLI, a lightweight coding agent that runs directly from the terminal. This tool is designed to maximize the reasoning capabilities of models like o3 and o4-mini, with upcoming support for additional API models like GPT-4.1.

Codex CLI enables:

Multimodal reasoning from the command line

Processing screenshots or sketches

Direct access to local code

A minimal interface connecting models to users and their computers

The tool is fully open-source and available today at github.com/openai/codex, and it is likely intended to compete with Anthropic’s Claude Code. OpenAI also said that more agentic coding tools in the same vein are on the way. Look out, Cursor and Windsurf!

Access and availability

Access to the new models is being rolled out across different tiers of OpenAI's products:

o3 and o4-mini replace earlier models across all paid ChatGPT tiers starting today

Enterprise and Edu get access next week

Free users can try o4-mini via the "Think" toggle

Both o3 and o4-mini are also available to developers today via the Chat Completions API and Responses API, with some developers needing to verify their organizations to access these models. The Responses API supports:

Reasoning summaries

Preserving reasoning tokens around function calls for better performance

Soon-to-be-added built-in tools like web search, file search, and code interpreter
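For developers, a request that asks for a reasoning summary and exposes one custom function might look roughly like the payload below. Treat it as a sketch: the field names follow my reading of the public Responses API reference and should be checked against it, and the `get_utility_data` tool is hypothetical. The code only builds and prints the payload; it does not call the API.

```python
import json


def build_request(question: str, model: str = "o3") -> dict:
    """Assemble a Responses-API-style payload (field names assumed, see lead-in)."""
    return {
        "model": model,
        "input": question,
        # Ask for a summary of the model's reasoning alongside the answer.
        "reasoning": {"effort": "medium", "summary": "auto"},
        "tools": [
            {
                "type": "function",
                "name": "get_utility_data",  # hypothetical custom tool
                "description": "Return summer electricity demand in GWh for a year.",
                "parameters": {
                    "type": "object",
                    "properties": {"year": {"type": "integer"}},
                    "required": ["year"],
                },
            }
        ],
    }


payload = build_request("How will summer energy usage in California compare to last year?")
print(json.dumps(payload, indent=2))
```

With the official Python SDK installed and an API key configured, a payload like this would typically be passed as keyword arguments to the client's Responses create call; swapping `model` to `"o4-mini"` is the only change needed to target the smaller model.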

OpenAI expects to release o3-pro in a few weeks with full tool support, while Pro users can continue to access o1-pro in the interim.

The future direction

Today's releases reflect the direction OpenAI's models are heading: converging the specialized reasoning capabilities of the o-series with more of the natural conversational abilities and tool use of the GPT-series. By unifying these strengths, future models can support seamless, natural conversations alongside proactive tool use and advanced problem-solving.

This release represents yet another step toward more agentic AI systems that can independently execute complex tasks while maintaining a natural, conversational interface. As AI continues to evolve, finding the right balance between specialized reasoning and broad applicability remains crucial, with o3 and o4-mini demonstrating substantial progress on this frontier.
