OpenAI's coding agent is here

The new Codex graduates coding bots from autocomplete to autocommit

Codex (just announced) is OpenAI’s opening bid to turn software engineering into an asynchronous, multi-agent activity.

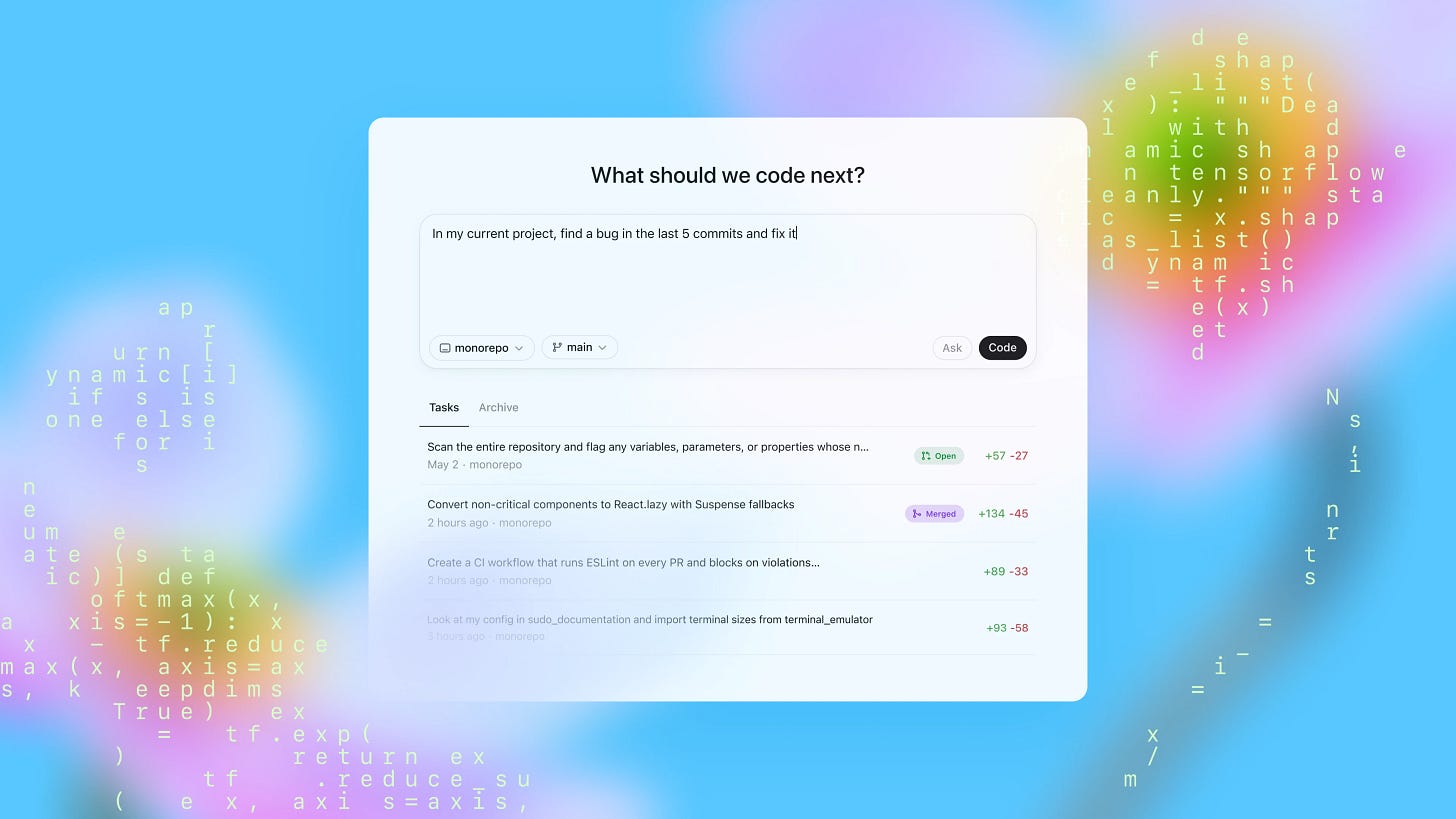

Codex appears in ChatGPT’s sidebar as a cloud-hosted agent that spins up an isolated replica of your repo, writes code, runs tests, and submits pull requests, all while you watch a progress log crawl by in real time.

Under the hood is codex-1, a variant of the o3 reasoning model fine-tuned with reinforcement learning on real bugs, real test suites, and real human pull requests. The result: patches that look like they came from a competent teammate rather than a language model.

Codex the tool

An agent inside ChatGPT. Fire a prompt (“Refactor auth middleware”) and Codex shells into a sandbox, runs linter + unit tests, and only exits after the green bar lights up. Every terminal command and diff is cited so you can audit the run step-by-step.

AGENTS.md guidance. Drop one in your repo to teach the agent project-specific rituals (custom test runners, code style quirks, CI flags). Think of it as a programmable, persistent conscience for the model (similar to .cursorrules files).

Codex CLI updates. An open-source terminal companion (npm i -g @openai/codex) that pipes the same brains into your local workflow. It supports approval modes from suggest to full-auto, runs network-blocked, and can be scripted in CI pipelines. This was announced last month, but got model updates with this announcement.

Security posture. Every task runs in a locked-down container with outbound network disabled; policy tuning makes the agent loudly refuse malware prompts.

Price and access. Free-while-it-lasts for Pro, Team, and Enterprise; API access to a trimmed codex-mini-latest costs $1.50/M input tokens after the honeymoon period.
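To put the $1.50/M figure in perspective, here is a back-of-the-envelope sketch in Python. Only the input-token price comes from the announcement; the workload numbers (tasks per day, tokens per task) and the function name are hypothetical, and output-token charges are deliberately left out because they are not quoted above.

```python
# Input-token price for codex-mini-latest as quoted in this article.
INPUT_PRICE_PER_MILLION_USD = 1.50


def input_cost_usd(input_tokens: int,
                   price_per_million: float = INPUT_PRICE_PER_MILLION_USD) -> float:
    """Return the input-side cost in USD for a given number of input tokens."""
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    return input_tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    # Hypothetical workload, chosen only for illustration.
    tasks_per_day = 40
    tokens_per_task = 50_000

    daily_tokens = tasks_per_day * tokens_per_task
    daily_cost = input_cost_usd(daily_tokens)
    print(f"{daily_tokens:,} input tokens/day costs about ${daily_cost:.2f}")
    # prints "2,000,000 input tokens/day costs about $3.00"
```

Under those made-up assumptions the input side is pocket change; a real bill would also depend on output tokens and on how much context each task actually pulls in.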

Codex the model

OpenAI revealed just enough to tease the new codex-1 model powering the agent.

Max context: 192k tokens

Base: o3 family, then RL-finetuned

Training signal: Real SWE tasks, test outcomes, human-sounding PRs

That context window dwarfs the 4k token limit of the 2021 Codex lineage (12B parameters) that once powered GitHub Copilot. Bigger context lets the agent ingest an entire medium-sized codebase in one gulp, reason across hundreds of files, and still squeeze in the diff it plans to commit.
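How big is "medium-sized"? A rough Python sketch can estimate whether a repo fits in a 192k-token window. Everything except the 192k figure is an assumption: the four-characters-per-token ratio is a crude rule of thumb rather than a real tokenizer, and the file suffixes and the 20k-token reserve for the diff are hypothetical defaults.

```python
from pathlib import Path

CONTEXT_WINDOW_TOKENS = 192_000  # max context quoted in this article
CHARS_PER_TOKEN = 4              # rough assumption, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count by rounding up len(text) / CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)


def estimate_repo_tokens(root: str, suffixes=(".py", ".md", ".toml")) -> int:
    """Sum the approximate token counts of source files under root."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            total += estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
    return total


def fits_in_context(repo_tokens: int, reserved_for_output: int = 20_000) -> bool:
    """True if the repo plus a reserve for the planned diff fits in the window."""
    return repo_tokens + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    tokens = estimate_repo_tokens(".")
    print(f"~{tokens:,} tokens; fits in one pass: {fits_in_context(tokens)}")
```

By this heuristic, 192k tokens corresponds to somewhere around 700 KB of source text, which is why the window is better suited to a small monorepo than a sprawling one.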

On OpenAI’s internal SWE suite, codex-1 solved tasks without any repo-specific scaffolding, outperforming vanilla o3 at style conformity and test-pass rates. Quantitative numbers remain redacted (of course), but early external testers (Cisco, Temporal, Superhuman, Kodiak) claim it already ships code that lands after one review round.

The 2021 Codex, which seems ancient at this point, once proved LLM-assisted autocomplete could shave seconds off keystrokes. codex-1 escalates from autocomplete to autocommit. OpenAI’s approach keeps review loops in human hands, but aims to outsource the day-to-day toil.

Competitors (Anthropic’s Claude Code, Google’s Gemini Code Assist) should worry. OpenAI’s approach stitches a full DevEx stack (ChatGPT + Agents + CLI + API) around a model whose context window covers a small monorepo.

Developers who embrace audit-driven agents will inevitably ship faster. If you ignore them, you risk spending afternoons catching up on PRs written by bots that never take coffee breaks.